Is your organization thinking about integrating artificial intelligence (AI) into the workplace? You certainly wouldn’t be alone: businesses in every industry are turning to this revolutionary technology to increase speed, efficiency, and productivity while controlling costs and boosting bottom lines.

Just how popular is AI becoming today? If recent numbers are any guide, the technology is growing by leaps and bounds with no signs of slowing.

According to the latest McKinsey Global Survey, one-third of respondents say their organizations regularly use generative AI in at least one business function. Meanwhile, 77% of devices in use today use some form of AI. The future looks promising too: the International Data Corporation (IDC) predicts that worldwide spending on AI-centric systems will pass $300 billion by 2026.

Despite the excitement building around AI, integrating machine intelligence and generative AI into your business isn’t all upside. If your organization chooses to bring this budding technology on board, there are significant risks to consider. AI has the potential to cause real harm to individuals, companies, communities, and society, so setting up a system of governance is critical to deploying AI ethically and responsibly.

AI governance establishes the rules of the game for your machine learning and generative AI initiatives, and it needs to be mandated by senior leadership. Governance guidelines must outline best practices for operating ethically, ensuring fairness, respecting privacy, creating transparency, providing safety and security, and building trust in your organization’s AI systems.

As a technology leader, how can you start to develop responsible AI policies and procedures, educate everyone working on AI projects, and embed a culture of responsibility in your organization? That’s what this article will discuss.

AI Governance and Why It’s Necessary

AI has immense transformative power for your business. It can produce positive results, such as speeding up manual processes, boosting productivity, and improving your bottom line, or it can bring significant harm to individuals and organizations.

Consider the following news headlines:

“Online education platform’s recruiting AI rejects applicants due to age”

“ChatGPT hallucinates court cases”

“Healthcare algorithm failed to flag Black patients”

“Amazon’s AI-enabled recruitment tool only recommended men”

These are all unfortunate examples of AI deployments that failed to perform as their creators intended, producing unfair and potentially damaging outcomes.

Ultimately, the outcome of your organization’s AI deployment may well depend on the governance structures you have in place. Keep in mind that governance must be a holistic structure of operating principles, policies, guidelines, and procedures designed to oversee projects, ensure alignment with business objectives, and maintain compliance with existing regulations. Without governance, your AI initiatives can fall victim to many risks and unintended consequences that can put your company in the news for all the wrong reasons. These potentially damaging risks include:

Information security issues: When your organization begins integrating AI, be prepared for many new information security risks. Access threats such as insecure plugins, permissions issues, and excessive agency can victimize AI systems, especially those built on large language models (LLMs). These threats bring problems such as unauthorized code execution, chatbot manipulation, cross-site and server-side request forgeries, indirect prompt injection, and malicious plugin use, all of which can threaten your company’s network integrity, data security, and brand reputation.

Threat actors may also target your organization’s data directly. Attackers look to exploit supply chain vulnerabilities and prompt injection opportunities, which can cause your systems to expose personal information or perform harmful actions. Leaking private data can open your organization to regulatory infractions and the painful financial and legal penalties that can follow. Attackers can also insert poisoned data into training datasets, resulting in skewed outputs, network breaches, and system failures. Denial-of-service attacks can overwhelm systems, often resulting in loss of user access and excessive costs.

Generative AI also lets threat actors raise the bar with increasingly sophisticated attack methods. Attackers can use it to swiftly and discreetly compromise AI activity with corrupted data and to craft expertly disguised phishing attacks that are nearly impossible to detect.

Bias and discrimination: When models are trained on biased data, they can produce unfair and discriminatory outcomes in critical areas such as hiring, lending, insurance, and law enforcement, and those outcomes can take a real human toll.

Privacy invasion: AI models trained on sensitive data (for example, a cancer detector trained on a dataset of biopsy images and deployed on individual patient scans), as well as models that use facial recognition, can violate privacy laws if they aren’t developed with appropriate data masking and privacy procedures.

Lack of explainability (or interpretability): When your AI model makes automated predictions or recommendations in high-stakes domains such as evaluating job applicants, explainability (the degree to which people can question, understand, and trust the system) is crucial. When systems make decisions “in a black box,” people naturally mistrust them and resist adopting them.

Unauthorized data usage: Certain types of models require immense amounts of training data, which opens up the risk of using proprietary, sensitive, or copyrighted information. Even when the use is accidental, unauthorized use of such data can lead to costly intellectual property (IP) lawsuits or compliance violations for your organization.

Patent and IP challenges: Developments in the brave new world of AI raise challenges for the patent system. Patent laws emerged to protect human inventors who develop creative, useful inventions and share them with the public. AI’s ability to partially automate the inventive process raises questions about how to apply patent law to inventions developed with the technology’s assistance.

For instance, is it clear who owns the content that generative AI platforms create for you or your customers? Is an invention created with the assistance of an AI system patentable? And if so, by whom?

Patents are just one type of intellectual property, which also includes copyrights, trademarks, industrial designs, and trade secrets. IP laws protect all of these, and models can unknowingly violate those laws by using protected content, resulting in costly lawsuits and barriers to innovation for your organization.

AI-generated content: Would you like to know when you’re reading content created by a machine? Many people do, so they can decide whether to trust it or follow its advice.

Trade secret disclosure: Generative AI apps can pose a unique threat to a company’s trade secrets. Many of these apps capture and store user inputs to train their models. Once captured, that information sometimes cannot be deleted by the user, may be used by the app, and may be accessed by the company that created the app. If employees put a company trade secret into an AI prompt, that secret could be at risk of losing its protection.

Source code theft: Your software source code may hold your business’s crown jewels, including personal and financial information, trade secrets, intellectual property, and documentation of the company’s inner workings. If that code fell into the wrong hands, the result could be intellectual property theft with significant financial and reputational harm to your business. Source code theft can result from outsiders exploiting insecure plugins or unauthorized permissions to breach systems and steal code, or it can be an inside job by a rogue employee. Either way, valuable business assets are compromised.

AI Governance Frameworks

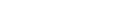

AI governance frameworks provide the principles, structures, and processes needed to mitigate the many risks of AI adoption.

First, governance must include human oversight. In fact, proposed AI regulations require it. According to the EU’s proposed AI Act, “Human oversight shall aim at preventing or minimizing the risks to the health, safety or fundamental rights that may emerge when a high-risk AI system is used”. In practice, that means establishing controls that let people view, explore, and calibrate AI system behavior.

We strongly recommend that your executive leadership team formally assign AI governance responsibility to a specific team or group in your organization. These people are ultimately accountable for the outputs of your ML models and generative AI systems, and they set up the structures to detect and respond when those systems behave in unexpected ways.

Your team’s AI model risk management framework must proactively address potential stumbling blocks like bias, discrimination, and lack of explainability by embedding ethical principles into the design phase.

If your models use sensitive data, you need to understand which data protection laws you must comply with and the issues they raise. For instance, if you must comply with the GDPR’s right to be forgotten (the right to erasure), your organization needs a way to delete all of a user’s personal data from all of your systems, including the data in your machine learning pipeline. To do that, you need a mechanism to detect sensitive data as it enters your systems.
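
To make the detection-and-deletion idea concrete, here is a minimal Python sketch. It assumes a toy in-memory store keyed by user ID; the `PipelineStore` class, the `detect_pii` function, and the two regular expressions are hypothetical illustrations, not a production PII scanner or any particular product’s API.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately simple patterns: they will miss some PII and
# flag some non-PII (dates, for example). Real detection needs far more.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def detect_pii(text: str) -> list[str]:
    """Return the sorted names of PII categories found in a piece of incoming text."""
    return sorted(name for name, pattern in PII_PATTERNS.items() if pattern.search(text))


@dataclass
class PipelineStore:
    """Hypothetical stand-in for the datasets that feed an ML pipeline."""

    records: dict[str, list[str]] = field(default_factory=dict)   # user_id -> raw records
    pii_flags: dict[str, set[str]] = field(default_factory=dict)  # user_id -> PII categories seen

    def ingest(self, user_id: str, text: str) -> None:
        # Flag sensitive data at the point of entry so it can be located later.
        found = detect_pii(text)
        if found:
            self.pii_flags.setdefault(user_id, set()).update(found)
        self.records.setdefault(user_id, []).append(text)

    def forget(self, user_id: str) -> int:
        """Erase everything held for one user; return the number of records removed."""
        self.pii_flags.pop(user_id, None)
        return len(self.records.pop(user_id, []))


store = PipelineStore()
store.ingest("u42", "Contact me at jane@example.com")
store.ingest("u42", "Order shipped")
print(store.pii_flags)      # {'u42': {'email'}}
print(store.forget("u42"))  # 2
```

Flagging sensitive data at ingestion is what makes a later erasure request tractable: because every record is tied to a user ID when it enters, `forget` can find and remove everything held for that person. A real pipeline may also need to purge derived artifacts such as feature stores and backups.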

Modern data science and AI teams need to understand the strategies available to them in the face of challenging data privacy requirements. These include deleting the dataset, masking data, storing only IDs that can be used to reconstruct the data, updating your privacy policy, and obtaining full client consent, among others. Teams must then strike the right balance between protecting privacy and preserving model performance.
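
Two of these strategies, masking data and storing only IDs, can be sketched briefly in Python. This is a minimal illustration under stated assumptions: the hard-coded `SECRET_KEY`, the `TokenVault` class, and the `mask_email` rule are hypothetical, and a real deployment would keep the key in a key management system and the vault behind strict access control.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical secret for illustration only; never hard-code a real key.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a stable keyed token (truncated HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}" if domain else "***"


class TokenVault:
    """Hypothetical, separately secured lookup from token back to the original ID."""

    def __init__(self) -> None:
        self._by_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = pseudonymize(value)
        self._by_token[token] = value
        return token

    def reveal(self, token: str) -> Optional[str]:
        return self._by_token.get(token)

    def erase(self, value: str) -> None:
        # Removing the mapping means the vault can no longer resolve the token.
        self._by_token.pop(pseudonymize(value), None)


vault = TokenVault()
record = {
    "customer": vault.tokenize("jane@example.com"),  # opaque 16-character token
    "contact": mask_email("jane@example.com"),       # 'j***@example.com'
    "spend": 120,
}
```

With this design, the training data carries only opaque tokens and masked values, while re-identification requires access to the separately controlled vault. Under the GDPR, pseudonymized data that can still be re-identified is generally treated as personal data, so this approach reduces exposure rather than removing compliance obligations.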

Information security is another important aspect of AI governance. AI adoption can expose your business to information security risks, including access vulnerabilities, data manipulation, privacy violations, increasingly sophisticated attacks, and the reputational damage that follows a failure to secure your data. Your organization may need to mandate strict data protection measures such as secure access control, data encryption, and vigilant monitoring to prevent privacy invasion, unauthorized data usage, trade secret disclosure, and source code theft.

Remember, your risk management framework must also include legal checks and audits to prevent IP violations, including any issues with patents or AI-generated content. It’s excellent practice for your team to maintain up-to-date knowledge of the continuously evolving IP laws and regulations to avoid problems.

In addition to legal checks and audits, governance frameworks must ensure the explainability and interpretability of AI decision-making so that your organization remains transparent and accountable. They should also determine what disclosures you need to make. Transparency is critical for solidifying your stakeholders’ trust, especially in areas with significant human impact.

The Role of Centers of Excellence (COE) in AI Governance

As we mentioned earlier, organizations that want to move forward with AI adoption need to establish accountability and governance structures. If you don’t yet have a governing body for AI in your organization, now is the time to establish an AI Center of Excellence (COE) composed of experts in data management, data science, legal, ethics, cybersecurity, privacy, compliance, and relevant business units. Gathering professionals from diverse practices provides a fuller picture of risk and helps ingrain ethical standards and accountability in project development and deployment.

It can be helpful to think of your COE as a centralized hub of knowledge and guidance that shares AI best practices and takes the lead in creating a culture of responsible and ethical use. Your COE will serve an oversight role, with responsibilities such as ensuring strategic alignment, making sure projects meet regulatory and ethical standards, providing the education and training needed for responsible AI use across your organization, and setting all-important project guardrails.

AI governance is critical for building guardrails around dos and don’ts, especially in the creation and use of data. AI is like a knife: it can be the best or the most dangerous tool depending on which side you decide to hold. Governance can also reinforce ethical and responsible AI as the capability starts to flow through every vein of the company.

By establishing an AI COE, your organization makes a statement: we care that our AI systems are transparent, operate ethically, and respect people’s privacy. That kind of statement goes a long way toward building long-term stakeholder trust in a rapidly digitizing world.

Technology Supporting AI Governance

As AI expands its scope and impact across all industries, effective governance will become extremely important as organizations look to scale AI deployments.

But how can technology support governance and structures like AI COEs?

Emerging software tools, like Shibumi’s AI Value Accelerator, can play a significant role in the success of your AI COE and the future of AI governance. AI Value Accelerator can help your team drive alignment between your company strategy and its AI projects, scale your AI ideas pipeline, prioritize AI use cases based on a consistent methodology, enforce governance best practices, and automatically monitor the business benefits of AI deployments.

Parting Thoughts

In conclusion, governing your AI projects is a mandatory component of responsible and ethical AI development. As a technology or business leader, you must understand the need for AI governance, its requirements, and how to develop effective policies that ensure your organization’s future projects benefit people both inside and outside the company. Implementing such policies will undoubtedly present challenges, but organizations with the foresight to invest in AI governance today will enjoy the many business advantages of ethical AI deployment tomorrow.